Blog 5: Giving Voice to the Past: How AI Helps Us Listen

By Dr. Diego Rincon Yanez

One of the key challenges in the VOICES project is data heterogeneity. The collections we are studying focus on the lived experiences of women, including legal disputes, wills, land ownership, female moneylending, and much more. These are geographically scattered, some of them are not digitised, and they are written in early modern English, which makes them difficult to read. To make matters worse, there is no consistency of spelling, grammar and syntax. To tackle these sources effectively, we turn to Artificial Intelligence with a human-in-the-loop approach — meaning historians and computer science researchers guide the process and verify the outputs at every step.

From Manuscript to Meaning

Understanding the voices of the past starts with the physical evidence. Historical researchers at VOICES begin by identifying manuscripts that shed light on the daily lives of ordinary women in early modern Ireland. So far, VOICES has identified more than thirteen major archival collections, each of which allows us to explore patterns of violence, social connections, female agency, and the flow of money.

To do this at scale, we need some level of automation. This is where AI-powered tools, especially Optical Character Recognition (OCR), come in. These tools help convert scanned manuscripts into machine-readable text. For this task, we currently rely heavily on the Transkribus platform, which combines computer vision and annotation tools tailored to historical documents. Once the text is captured, historians review it to ensure a high level of transcription accuracy. Then, Natural Language Processing (NLP) techniques are applied to analyse the text: to detect names, places, events, and so on.

The Evolution of NLP

NLP has changed dramatically over the past decade. In the early days, it relied on steps like lemmatising, stemming, stop-word removal, and similarity calculations. Today, cutting-edge NLP models are built on deep learning, in particular Transformer models like BERT and its variants, which can “learn” patterns and context from vast datasets.
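To make the older, rule-based style concrete, here is a minimal sketch of stop-word removal, stemming, and a similarity calculation using only the Python standard library. The stop-word list, the suffix-stripping `crude_stem` function, and the two sample sentences are all invented for illustration; they are not part of the VOICES pipeline, and a real project would use an established stemmer or lemmatiser.

```python
import re

# Tiny illustrative stop-word list (an assumption, not a standard resource).
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "was", "by", "her"}


def crude_stem(word: str) -> str:
    """Strip a few common English suffixes; a toy stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text: str) -> set[str]:
    """Lower-case, tokenise, drop stop words, and stem what remains."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return {crude_stem(t) for t in tokens if t not in STOP_WORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of distinct terms that two texts have in common."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Invented sentences: a modernised wording and an early-modern-style spelling.
modern = preprocess("The widow claimed the lands of her husband")
early = preprocess("The relict claymed the landes of her husband")

print(sorted(modern))
print(sorted(early))
print(round(jaccard(modern, early), 2))
```

In this toy case the two sentences share only the terms "land" and "husband", so the similarity score is one third even though a human reader sees the same statement twice. Surface-level methods of this kind have no way of knowing that "relict" and "widow", or "claymed" and "claimed", belong together.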

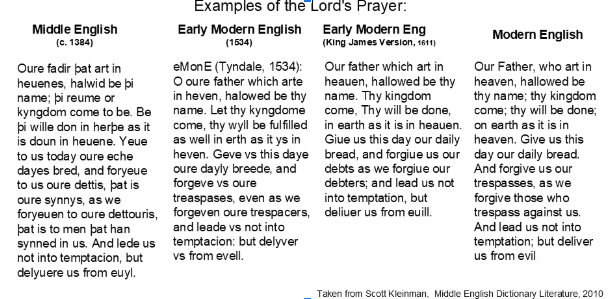

But even the most powerful model will struggle if it hasn’t seen examples like the data it is being asked to interpret. For VOICES, this is especially true: the manuscripts are written in early modern English, with spelling and grammar quite different from what AI models typically see in their training. For example, a word used in early modern English may have changed its spelling or fallen out of use altogether in contemporary English, even though its meaning in the source is clear. Without training on those older forms, a model may miss the point entirely and misclassify them as transcription errors. Equally, it may not recognize legal terminology, such as the use of the word ‘relict’ to mean ‘widow’.

Tailoring AI for Historical Texts

To meet this challenge, we are assessing specialized AI models like MacBERTh, which is trained specifically on early modern English, alongside more general models like RoBERTa, WikiNeural, and Stanford CoreNLP. These models are being validated through a custom-built NLP pipeline. For example, in the case of the 1641 Depositions, a carefully curated collection, we can evaluate how well these tools identify names, places, and events against the list that has already been extracted manually. These texts were annotated in earlier projects and are available in standard formats, including TEI-XML, a relational database model in SQL, and plain text, which allows for detailed benchmarking.
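One common way to run this kind of comparison is to score each model's output against the manually extracted list using precision, recall, and F1. The sketch below assumes entities are compared as exact (text, label) pairs; the function name `score_entities` and the toy gold and predicted sets are made up for illustration and do not come from the 1641 Depositions data or from the project's own pipeline.

```python
def score_entities(
    predicted: set[tuple[str, str]], gold: set[tuple[str, str]]
) -> dict[str, float]:
    """Exact-match precision, recall, and F1 over (text, label) pairs."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical manual annotations for one passage (toy data).
gold = {
    ("Elizabeth Price", "PERSON"),
    ("Mary", "PERSON"),
    ("Armagh", "PLACE"),
    ("Dublin", "PLACE"),
}

# Hypothetical model output: two correct entities and one mislabelled fragment.
predicted = {
    ("Elizabeth Price", "PERSON"),
    ("Armagh", "PLACE"),
    ("Price", "PLACE"),
}

scores = score_entities(predicted, gold)
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

Exact matching is the strictest option. A benchmark could also give partial credit when a model finds only part of a name, which matters for early modern texts where name boundaries and spellings are less predictable.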

Let’s look at an example based on the same early modern English paragraph, which appears in the deposition of Elizabeth Price (Armagh). The images below illustrate which entities each of the AI models currently identifies.

Using Stanford CoreNLP Toolkit (with the Modernised English Text)

Using BERT (with the Modernised English Text)

Using spaCy (with the Early Modern English Text)

Why Reproducibility and Responsibility Matter

In a project like VOICES, reproducibility, explainability, and accountability are not optional; they are essential. That is why all our AI-powered tasks are designed to be as transparent and collaborative as possible, always with human oversight.

We are not using AI to replace historians. We are using it to amplify forgotten voices — sentence by sentence — in a responsible, reproducible, and ethical way.

By combining cutting-edge AI with the careful attention of historians, the VOICES project continues to shed light on women’s experiences that were overlooked in their time — and might have remained hidden in ours.

Next Steps for People and Places Recognition

As the project moves forward, one of our next steps in the natural language processing workflow will involve the use of controlled vocabularies to enhance model performance — especially in handling the complexities of early modern English. Historical texts often contain inconsistent or misspelled versions of names, whether due to variations in spelling conventions or the hand of the original scribe. For example, the name “Elinor” might appear in different documents as “Elynor”, “Elenor”, or even “Ellinore” — all referring to the same individual. By developing curated lists of known names, places, and entities — informed by expert human annotation — we can guide AI models to better recognize these variants. This human-in-the-loop approach not only improves accuracy but also preserves the nuance and diversity of the original language, while making it more accessible to computational analysis.
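As a rough illustration of how a controlled vocabulary could be used, the sketch below maps spelling variants onto a curated list of names using approximate string matching from Python's standard `difflib` module. The list `CONTROLLED_NAMES`, the function `normalise_name`, and the 0.8 similarity cutoff are assumptions chosen for this example; they are not the project's actual vocabulary or method.

```python
from difflib import get_close_matches
from typing import Optional

# Hypothetical curated list; a real one would come from expert annotation.
CONTROLLED_NAMES = ["Elinor", "Elizabeth", "Margaret"]


def normalise_name(
    variant: str,
    vocabulary: list[str] = CONTROLLED_NAMES,
    cutoff: float = 0.8,
) -> Optional[str]:
    """Return the closest controlled form of a name, or None if nothing is close."""
    # Compare in lower case, then map back to the curated spelling.
    lookup = {name.lower(): name for name in vocabulary}
    matches = get_close_matches(variant.lower(), list(lookup), n=1, cutoff=cutoff)
    return lookup[matches[0]] if matches else None


for spelling in ["Elynor", "Elenor", "Ellinore", "Katherine"]:
    print(spelling, "->", normalise_name(spelling))
```

Here "Elynor", "Elenor", and "Ellinore" all resolve to "Elinor", while "Katherine" is left unmatched because nothing in the list is close enough. A similarity threshold like this can also merge names that are genuinely different, which is one reason the suggested matches would still need to be checked by a historian.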